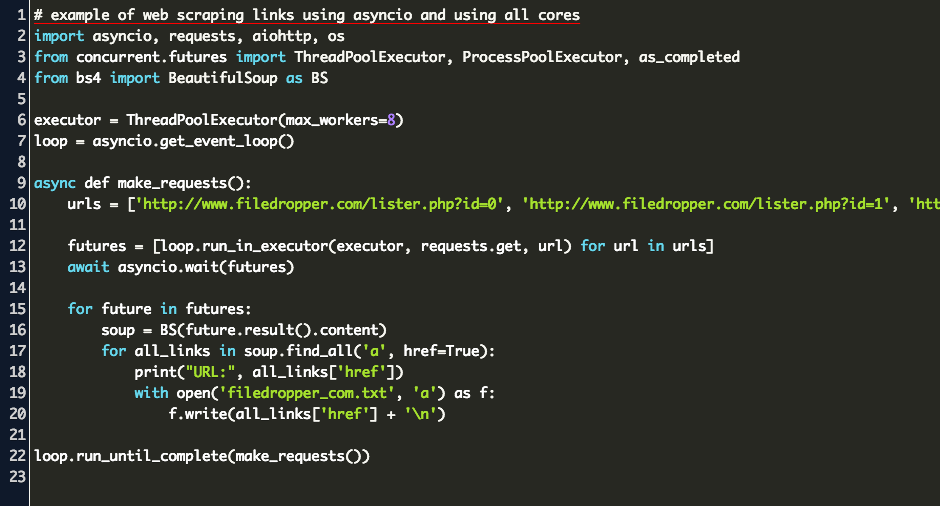

Web scraping is the technique of extracting data from a website.

To scrape pages that require a login, there is, in my view, a simpler way that gets you there without Selenium, mechanize, or other third-party tools, although it is semi-automated. When you log in to a site in the normal way, you identify yourself uniquely with your credentials. That identity is stored in cookies and headers for a limited period of time and is reused for every subsequent interaction, so a script that sends the same cookies and headers is treated as the same logged-in user.

A related task is printing all the links on a website whose URL contains a specific string (for example, python).
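
The semi-automated login approach can be sketched with the standard library's `urllib.request`: log in once in the browser, copy the session cookie from the developer tools, and send it along with each request. The cookie name `sessionid`, its value, the User-Agent string, and the helper names below are hypothetical placeholders; which cookies and headers a real site checks depends on that site.

```python
import urllib.request

# Hypothetical placeholders: after logging in with your browser, copy the real
# cookie string and User-Agent from the browser's developer tools.
COOKIE = "sessionid=PASTE_YOUR_SESSION_COOKIE_HERE"
USER_AGENT = "Mozilla/5.0"


def build_authenticated_request(url, cookie=COOKIE, user_agent=USER_AGENT):
    """Build a Request that replays the browser's session cookie and User-Agent."""
    headers = {"Cookie": cookie, "User-Agent": user_agent}
    return urllib.request.Request(url, headers=headers)


def fetch_as_logged_in_user(url, cookie=COOKIE, timeout=10):
    """Download a page with the copied session (performs a network request)."""
    request = build_authenticated_request(url, cookie)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Assumes the page is UTF-8; undecodable bytes are replaced.
        return response.read().decode("utf-8", errors="replace")
```

Because the session cookie expires after a while, the copied value has to be refreshed from the browser once requests start returning the login page again.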

The BeautifulSoup module is designed for web scraping. It can handle both HTML and XML, and it provides simple methods for searching, navigating, and modifying the parse tree.
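
A short sketch of those three operations on an inline HTML string. It assumes the `bs4` package is installed and uses Python's built-in `"html.parser"` backend (`lxml` or `html5lib` can be used instead if installed); the sample HTML is made up for illustration.

```python
from bs4 import BeautifulSoup

html = """<html><body>
<h1>Links</h1>
<p class="intro">Read the <a href="https://example.com/a">first page</a> now.</p>
</body></html>"""

soup = BeautifulSoup(html, "html.parser")

# Searching: find the first <a> tag anywhere in the tree.
link = soup.find("a")
print(link["href"])      # https://example.com/a

# Navigating: step from the link up to the tag that contains it.
print(link.parent.name)  # p

# Modifying: change an attribute and the link text in place.
link["href"] = "https://example.com/b"
link.string = "second page"
print(soup.p)
```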

Related course:

Browser Automation with Python Selenium

Get links from website

The example below prints all links on a webpage:
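
A minimal Python 3 sketch of such a script, assuming the standard-library `urllib.request` for the download and the `bs4` package with the built-in `"html.parser"`. The function names and the URL are placeholders, not the article's own code.

```python
import urllib.request

from bs4 import BeautifulSoup


def print_links(html):
    """Print the href of every <a> tag and return how many were printed."""
    soup = BeautifulSoup(html, "html.parser")
    count = 0
    for link in soup.find_all("a"):
        href = link.get("href")
        if href:  # skip <a> tags without an href, such as named anchors
            print(href)
            count += 1
    return count


def print_links_from_url(url):
    """Download a page and print its links (performs a network request)."""
    with urllib.request.urlopen(url) as response:
        return print_links(response.read())
```

Usage, with a placeholder URL: `print_links_from_url("https://example.com/")`.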

It downloads the raw HTML code with the line:
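
In Python 3 the download step can use `urllib.request.urlopen`, whose `read()` returns the body as bytes; BeautifulSoup accepts those bytes directly and decodes them itself. In this sketch `download_html` and its timeout are illustrative assumptions, and a hard-coded byte string stands in for a real response so the parsing step can be seen without network access.

```python
import urllib.request

from bs4 import BeautifulSoup


def download_html(url, timeout=10):
    """Return the raw body of `url` as bytes (performs a network request)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


# Offline stand-in for download_html("https://example.com/"): raw bytes like urlopen returns.
raw = b'<html><head><meta charset="utf-8"></head><body><a href="/about">About</a></body></html>'

# BeautifulSoup takes the bytes and decodes them, using a declared charset when present.
soup = BeautifulSoup(raw, "html.parser")
print(soup.a["href"])  # /about
```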

A BeautifulSoup object is then created, and we use it to find all links:
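
A sketch of the link-finding step on sample HTML (made up for illustration). `find_all("a")` returns every anchor tag, and `.get("href")` yields `None` when the attribute is missing. Passing a compiled regular expression as an attribute filter is one way to keep only absolute `http`/`https` links; that filter is an optional addition, not necessarily what the original example did.

```python
import re

from bs4 import BeautifulSoup

html = """
<a href="https://example.com/docs">Docs</a>
<a href="/relative/page">Relative</a>
<a name="anchor-without-href">No link</a>
"""

soup = BeautifulSoup(html, "html.parser")

# Every <a> tag; .get() returns None for the tag that has no href.
all_hrefs = [a.get("href") for a in soup.find_all("a")]

# Only tags whose href matches the regex (absolute http or https URLs).
absolute = [a["href"] for a in soup.find_all("a", href=re.compile(r"^https?://"))]

print(all_hrefs)  # ['https://example.com/docs', '/relative/page', None]
print(absolute)   # ['https://example.com/docs']
```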

Extract links from website into array

To store the links in an array (in Python, a list), you can use:
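
A sketch that collects the links into a list instead of printing them. `find_all("a", href=True)` matches only tags that actually have an `href`. The second step, resolving relative links against the page URL with `urllib.parse.urljoin`, is an optional addition; `base_url` and the HTML are placeholders.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

base_url = "https://example.com/blog/"  # placeholder: the page the HTML came from
html = '<a href="post-1.html">1</a> <a href="/about">About</a> <a href="https://other.org/">x</a>'

soup = BeautifulSoup(html, "html.parser")

# Collect every href into a list, in document order.
links = [a["href"] for a in soup.find_all("a", href=True)]

# Optionally turn relative links into absolute URLs.
absolute_links = [urljoin(base_url, href) for href in links]

print(links)           # ['post-1.html', '/about', 'https://other.org/']
print(absolute_links)  # ['https://example.com/blog/post-1.html', 'https://example.com/about', 'https://other.org/']
```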

Function to extract links from webpage

If you extract links repeatedly, you can use the function below:
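
A sketch of such a reusable function. The names `extract_links` and `extract_links_from_url` are hypothetical, and the optional `keyword` parameter is an assumed extension that keeps only links whose URL contains a given string (for example `"python"`). Parsing is separated from downloading so the function can also be used on HTML you already have.

```python
import urllib.request

from bs4 import BeautifulSoup


def extract_links(html, keyword=None):
    """Return the href of every <a> tag in `html`, in document order.

    If `keyword` is given, only links whose URL contains it are kept.
    """
    soup = BeautifulSoup(html, "html.parser")
    links = [a["href"] for a in soup.find_all("a", href=True)]
    if keyword is not None:
        links = [link for link in links if keyword in link]
    return links


def extract_links_from_url(url, keyword=None, timeout=10):
    """Download `url` and extract its links (performs a network request)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return extract_links(response.read(), keyword)
```

Usage, with a placeholder URL: `extract_links_from_url("https://example.com/", keyword="python")`.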
